The New Fragility of “Accurate” Data

Every company claims to have accurate data. But “accurate” is a moving target when your market can reinvent itself overnight. Subsidiaries spin up. Divisions split off. A single funding event can birth an entirely new buying center.

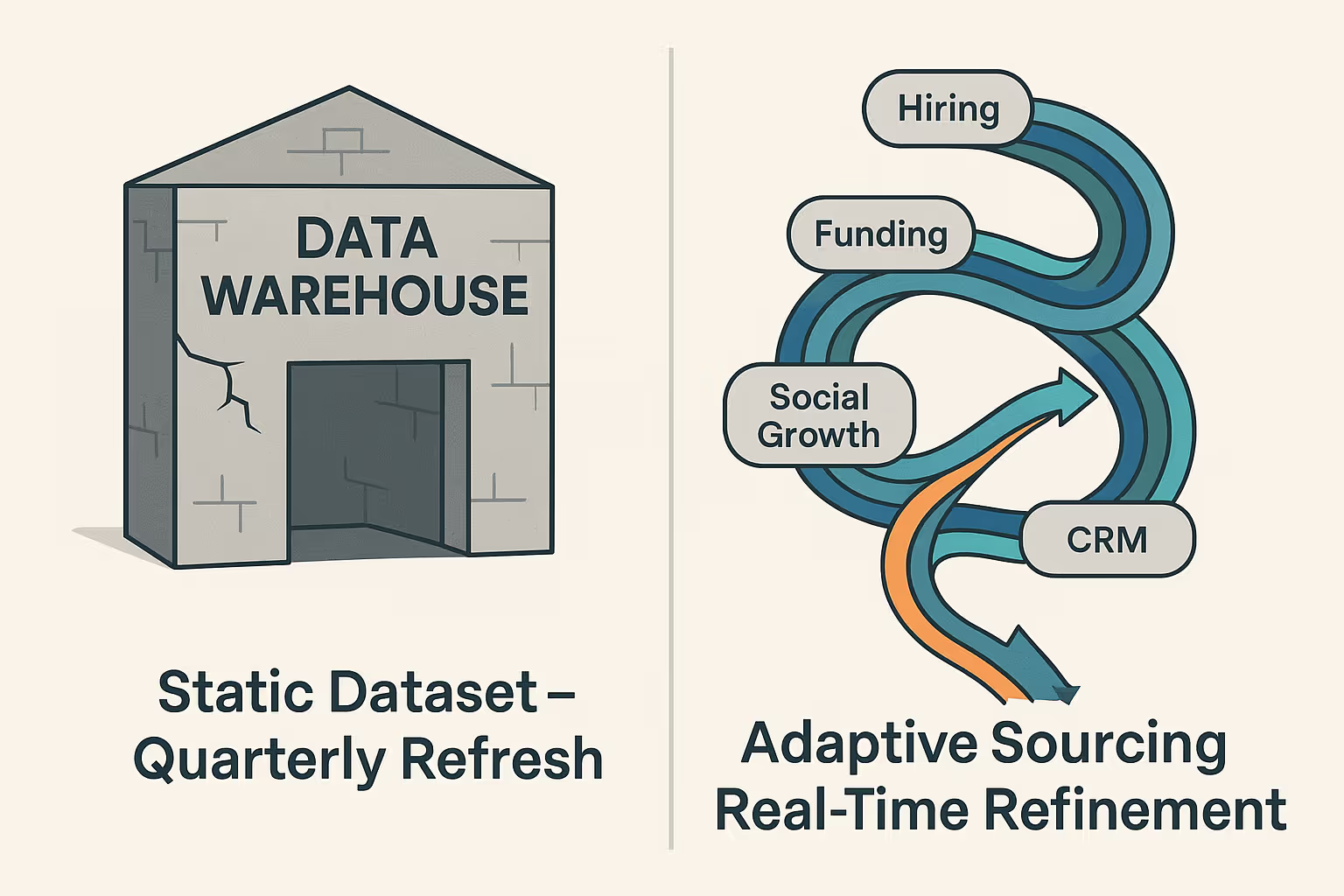

In the industrial age of data—think ZoomInfo, Apollo, or Dun & Bradstreet—accuracy was measured by volume and update cadence. Quarterly refreshes. Biannual verification. Those were considered fast.

But the modern GTM engine doesn’t move quarterly. It moves continuously. And that means your dataset has to breathe with your market—not lag behind it.

Data as Evolution, Not Inventory

LeadGenius was built around a contrarian idea: that no two go-to-market motions are identical. What works for Avalara’s expansion into EMEA won’t work for a cybersecurity firm scaling SMB outreach in the U.S. Each GTM strategy deserves its own data DNA.

That requires dynamic sourcing—data that can adjust, refine, and evolve in real time. Hiring spikes, funding rounds, tech stack changes, or new product launches aren’t “signals” to log quarterly; they’re the heartbeat of an adaptive model that learns as your business does.

This is the difference between data as inventory and data as evolution. Inventory gets stale the second it’s stored. Evolution compounds in value with every iteration.

Why This Matters More Now—Thanks to Tools Like Clay

Five years ago, adaptive data was a philosophical ideal. Today, it’s a practical necessity—and tools like Clay have made it more visible than ever.

Clay lets operators assemble and automate data flows from dozens of sources—social, web, CRM, hiring feeds—almost like Lego blocks. It exposes what GTM teams have always intuited: the problem was never data scarcity; it was static data architecture.

But here’s the catch: even Clay’s flexibility only amplifies the underlying truth. The power isn’t in the tool—it’s in the model you build. Clay may let you automate enrichment, but it’s still only as intelligent as the sourcing logic you feed it.

LeadGenius takes that principle and industrializes it—scaling bespoke data sourcing across millions of companies and hundreds of signals, continuously refreshed, human-verified, and tailored to your evolving ICP.

The Death of “One-Size-Fits-All” in GTM

In B2B go-to-market, homogeneity is death.

When everyone pulls from the same static dataset, every campaign, cadence, and ICP model starts to look the same. You get over-saturation, channel fatigue, and the eerie sense that every outbound motion is hitting the same tired inboxes.

Customization breaks that loop. It restores precision, agility, and relevance—qualities that machine-scale marketing can’t fake.

In a world where algorithms increasingly optimize for themselves, data customization is how human strategy reasserts control.

From Static to Symbiotic

Static data describes the world as it was. Adaptive data predicts the world as it’s becoming.

That’s the leap GTM leaders now have to make—from asking, “How big is our database?” to “How alive is our data?”

Because in the next era of growth, it won’t be the companies with the most data that win. It’ll be the ones whose data learns fastest.