We’re watching something subtle but profound unfold in the world of go-to-market operations. The emergence of generative AI has unlocked a new mode of research — not just automating rote data pulls, but offering something closer to synthesis, interpretation, even judgment.

But is any of it good enough?

I wanted to find out. So I ran a simple but telling experiment: test five of the leading generative AI models on a single, high-context B2B research task.

The goal wasn’t to summarize websites or repeat boilerplate. It was to see which model — if any — could reason about a complex, fast-scaling company with minimal surface-level data.

The company I chose: Astronomer, a workflow orchestration platform that unfortunately became more famous for "kisscam gate" than for being a tech unicorn deeply embedded in the modern data stack. Not the easiest research target if you want to avoid tabloid noise and Us Weekly articles. Their site is polished but vague. They’re mentioned on Hacker News more than TechCrunch. Their relevance is obvious to data engineers, but not to most sales teams.

Perfect.

The Challenge: Real B2B Research, Not Just Lookup

Each AI model got the same prompt:

“Research Astronomer. Identify company-specific pain points that suggest they may be encountering operational bottlenecks, data workflow constraints, or pipeline inefficiencies. Consider team size, product complexity, go-to-market model, and recent company activity.”

The task was intentionally open-ended. The best models wouldn’t just find facts — they’d identify patterns, draw inferences, and surface insights that aren’t explicitly stated.

The Models in Play

- ChatGPT Plus (o3 model)

- Claude Pro (Opus 4)

- Gemini 2.5 Pro

- Perplexity Pro

- Grok 4

I evaluated them using three core criteria:

- Reasoning: Could the model connect disparate signals into something coherent?

- Web Depth: Was the analysis grounded in recent or hard-to-surface sources?

- Contextual Fluency: Did it uncover relevant dimensions I hadn’t explicitly asked for?
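For anyone who wants to repeat this kind of comparison, the three criteria can be turned into a simple scorecard. What follows is a minimal sketch, not the method used for the rankings in this post: the 1-to-5 scale, the equal default weights, the `Scorecard` class, and the `model_a`/`model_b`/`model_c` scores are all illustrative assumptions.

```python
from dataclasses import dataclass

# The three criteria named above, as field names.
CRITERIA = ("reasoning", "web_depth", "contextual_fluency")


@dataclass
class Scorecard:
    """One model's scores on an assumed 1-5 scale (hypothetical structure)."""
    model: str
    reasoning: int
    web_depth: int
    contextual_fluency: int

    def total(self, weights=None):
        # Default to equal weighting; pass a dict to emphasize one criterion.
        weights = weights or {c: 1.0 for c in CRITERIA}
        return sum(getattr(self, c) * weights[c] for c in CRITERIA)


def rank(cards, weights=None):
    """Return scorecards sorted from highest to lowest weighted total."""
    return sorted(cards, key=lambda c: c.total(weights), reverse=True)


# Placeholder scores for illustration only; these are NOT results from the experiment.
cards = [
    Scorecard("model_a", reasoning=5, web_depth=3, contextual_fluency=4),
    Scorecard("model_b", reasoning=3, web_depth=5, contextual_fluency=3),
    Scorecard("model_c", reasoning=4, web_depth=2, contextual_fluency=4),
]

equal_ranking = [c.model for c in rank(cards)]
depth_heavy = {"reasoning": 1.0, "web_depth": 3.0, "contextual_fluency": 1.0}
depth_ranking = [c.model for c in rank(cards, depth_heavy)]

print(equal_ranking)
print(depth_ranking)
```

Changing the weights changes the order, which is the point: a team that values fresh sources over inference would weight Web Depth more heavily and could reasonably reach a different ranking.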

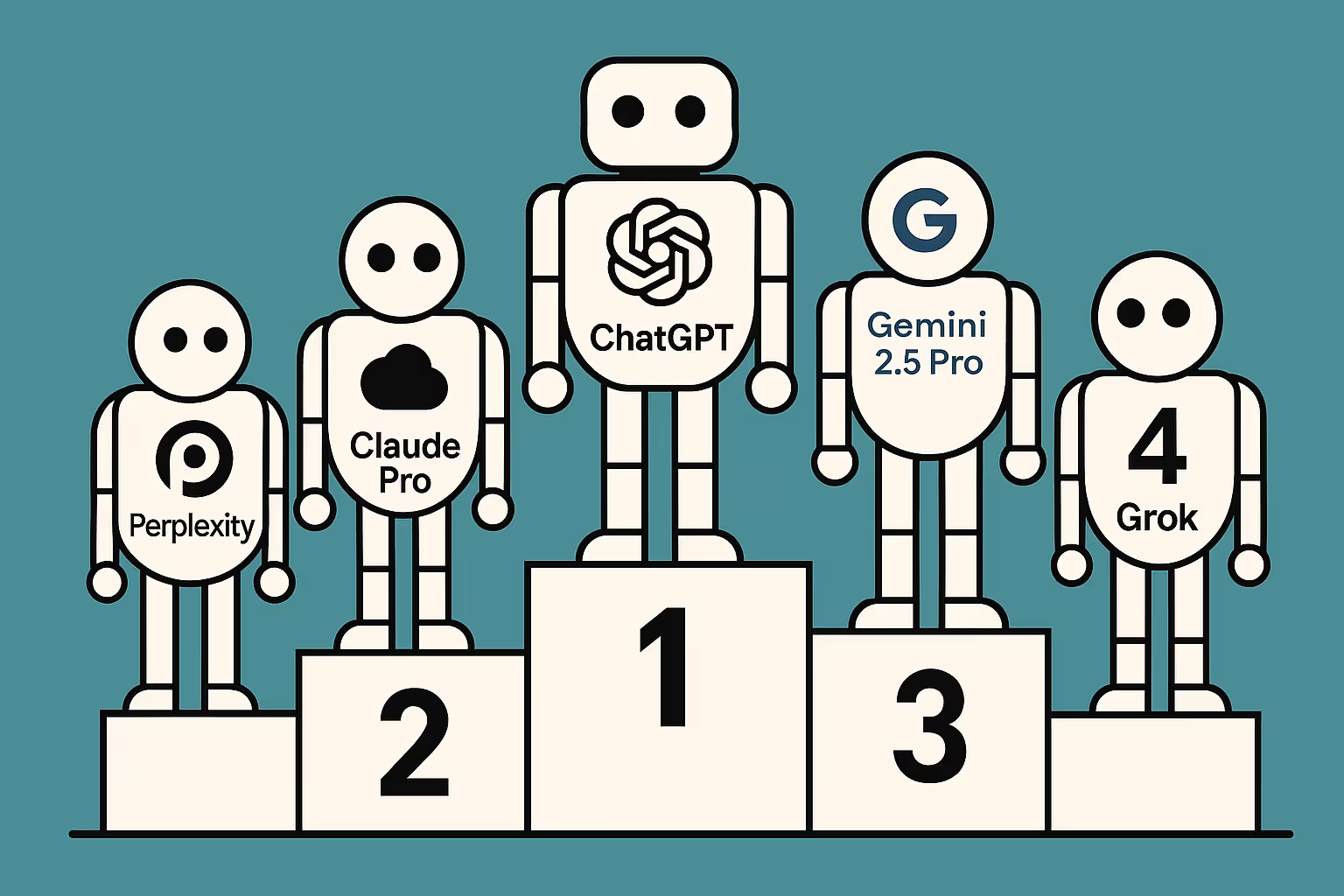

The Rankings

1st Place: ChatGPT o3

Of all five, ChatGPT was the only model that didn’t just answer the question — it engaged with it.

It recognized that Astronomer operates in a dense, competitive segment (workflow orchestration), where much of the pain isn’t outwardly visible. But it noted that the company’s heavy investment in hybrid Airflow deployments — bridging cloud and on-prem — likely leads to internal inefficiencies and data governance complexity.

It inferred that Astronomer’s go-to-market motion relies heavily on educating a technical user base, suggesting potential friction in onboarding and adoption. It even cited a partnership with dbt Labs as evidence that Astronomer is trying to position itself as a control plane, which in turn points to growing product sprawl.

In other words, it reasoned. Not just what is, but what that means.

Verdict: This wasn’t a summary. It was insight.

2nd Place: Gemini 2.5 Pro

Google’s Gemini was impressive in breadth. It pulled recent posts from Astronomer’s engineering blog, quoted hiring announcements, and surfaced GitHub repo velocity. Its knowledge graph is clearly deep.

But Gemini struggled to move from data to diagnosis. It could find the puzzle pieces, but it didn’t quite assemble them. When it mentioned a surge in developer-focused webinars, it didn’t ask why. When it highlighted new connectors for Airflow, it didn’t link that to platform complexity or customer segmentation.

Verdict: A brilliant researcher. A hesitant analyst.

3rd Place: Claude Opus 4

Claude’s response was eloquent and structurally sound — but somewhat speculative and dated. Without live web access, it relied heavily on 2023 data. It made intelligent guesses about Astronomer’s position in the ecosystem, but lacked the recency and signal fluency that the others demonstrated.

Its most interesting insight was subtle: it framed Astronomer’s dual audience — platform engineers and data scientists — as a potential GTM challenge, hinting at messaging fragmentation. A sharp take, but not enough to elevate it.

Verdict: Elegant, but not current.

4th Place: Perplexity Pro

Perplexity, once a favorite for live research, underdelivered. It surfaced recent articles and documentation, but read more like a curated RSS feed than a strategic analysis.

Its coverage was wide but shallow. It listed Astronomer’s enterprise features, its VC funding, its OSS roots — but never connected the dots. No commentary on technical debt. No speculation about GTM friction. No synthesis of team structure with platform breadth.

Verdict: A good tool for research assistants. Not strategists.

5th Place: Grok 4

To put it simply, Grok is not built for nuanced B2B research. The response was generic, repetitive, and lacked any contextual understanding of the modern data ecosystem. It missed key shifts happening in open-source orchestration, failed to recognize Astronomer’s positioning around hybrid deployments, and hallucinated at least one customer quote.

Verdict: Fun for general use. Not viable for GTM research.

What This Tells Us About the State of AI for GTM

AI is not a silver bullet for account research, but it’s getting close to being a force multiplier. When models like ChatGPT o3 can move beyond summarizing and into strategic reasoning, they begin to challenge our assumptions about how we gather account intelligence in the first place.

In the past, sales research meant relying on static firmographics or scraping headlines. Now, it’s about layering multiple signals — product usage trends, job openings, ecosystem shifts — and interpreting them dynamically.

The best models don’t just find answers. They build hypotheses.

What This Means for Revenue Leaders

Three reflections worth sharing:

- AI is only as good as the framing. The prompt matters. Vague questions get vague answers. Thoughtfully structured prompts yield surprisingly sophisticated outputs.

- Signal density matters. Even the best AI can’t invent insight out of thin air. For companies like Astronomer, where public data is limited, you need rich contextual signals — hiring, partnerships, tech stack, role evolution — to generate meaningful narratives.

- Interpretation is the new intelligence. Data is no longer the differentiator. Insight is. The ability to tell a coherent story from messy, partial signals is the skill — and increasingly, the product.
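The first two reflections, framing and signal density, can be made concrete with a small prompt builder. This is a rough sketch under stated assumptions: `build_research_prompt` is a hypothetical helper, the wording of the signals section is my own, and the signal entries are placeholders rather than real observations about Astronomer. It only assembles text and does not call any model.

```python
def build_research_prompt(company, focus_areas, considerations, signals=None):
    """Assemble a research prompt from explicit parts (hypothetical helper)."""
    if not focus_areas:
        raise ValueError("at least one focus area is required")

    parts = [
        f"Research {company}.",
        "Identify company-specific pain points that suggest they may be "
        f"encountering {', '.join(focus_areas)}.",
        f"Consider {', '.join(considerations)}.",
    ]

    # Only add a signals section when there is something to ground the model in.
    if signals:
        parts.append("Known signals to interpret, not just repeat:")
        for category, observations in signals.items():
            for observation in observations:
                parts.append(f"- [{category}] {observation}")

    return "\n".join(parts)


prompt = build_research_prompt(
    company="Astronomer",
    focus_areas=[
        "operational bottlenecks",
        "data workflow constraints",
        "pipeline inefficiencies",
    ],
    considerations=[
        "team size",
        "product complexity",
        "go-to-market model",
        "recent company activity",
    ],
    # Placeholder entries; replace with observations you have actually verified.
    signals={
        "hiring": ["<placeholder: a recent job posting>"],
        "partnerships": ["<placeholder: a recent partnership announcement>"],
    },
)

print(prompt)
```

Keeping the focus areas, considerations, and signals as separate arguments makes it easy to see which part of the framing changed when two runs produce different answers.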

The Takeaway

The most useful AI isn’t the one that retrieves the most data. It’s the one that reasons with the data it has.

ChatGPT o3 is leading that charge. Not because it knows more, but because it thinks better — or at least, it mimics reasoning well enough to be valuable.

If you’re a marketing or sales leader trying to understand where your next customer’s friction points are — or a data vendor trying to decide which accounts to prioritize — the future doesn’t belong to those with the biggest database.

It belongs to those who can synthesize and operationalize.