The Problem You're Solving

Your CRM is full of contacts. Your data provider gives you company-level tech stack visibility. Your sales team still can't answer: "Does this person actually touch the systems we sell into?"

If you're running RevOps for a vertical SaaS company or developer tool, you've hit the ceiling of what generic data infrastructure can do. Your product team builds for specific personas using specific tools. Your GTM stack treats everyone with the same job title as interchangeable.

That gap compounds every quarter.

Why Generic Data Breaks Down for Technical GTM

Standard enrichment gives you:

- Job title

- Company size

- Industry code

- Company-level tech stack

What you actually need:

- Technical role function (not just title)

- Individual tool ownership and usage

- System-level responsibilities

- Workflow context

Example: Three "Senior Engineers" at the same company might be:

- Frontend developer (React, Next.js) - not your ICP

- Data platform engineer (Airflow, Snowflake) - prime ICP

- Mobile engineer (Swift, Kotlin) - not relevant

Generic data sees one segment. You need three different plays.

Contact-Level Technographics: The Core Architecture

Company-level technographics:"This account uses Kubernetes, AWS, and Datadog"

Contact-level technographics:"Sarah owns the Kubernetes infrastructure, commits to Helm charts weekly, and influences monitoring tool decisions"

This shifts your data model from accounts to individuals with verified technical context.

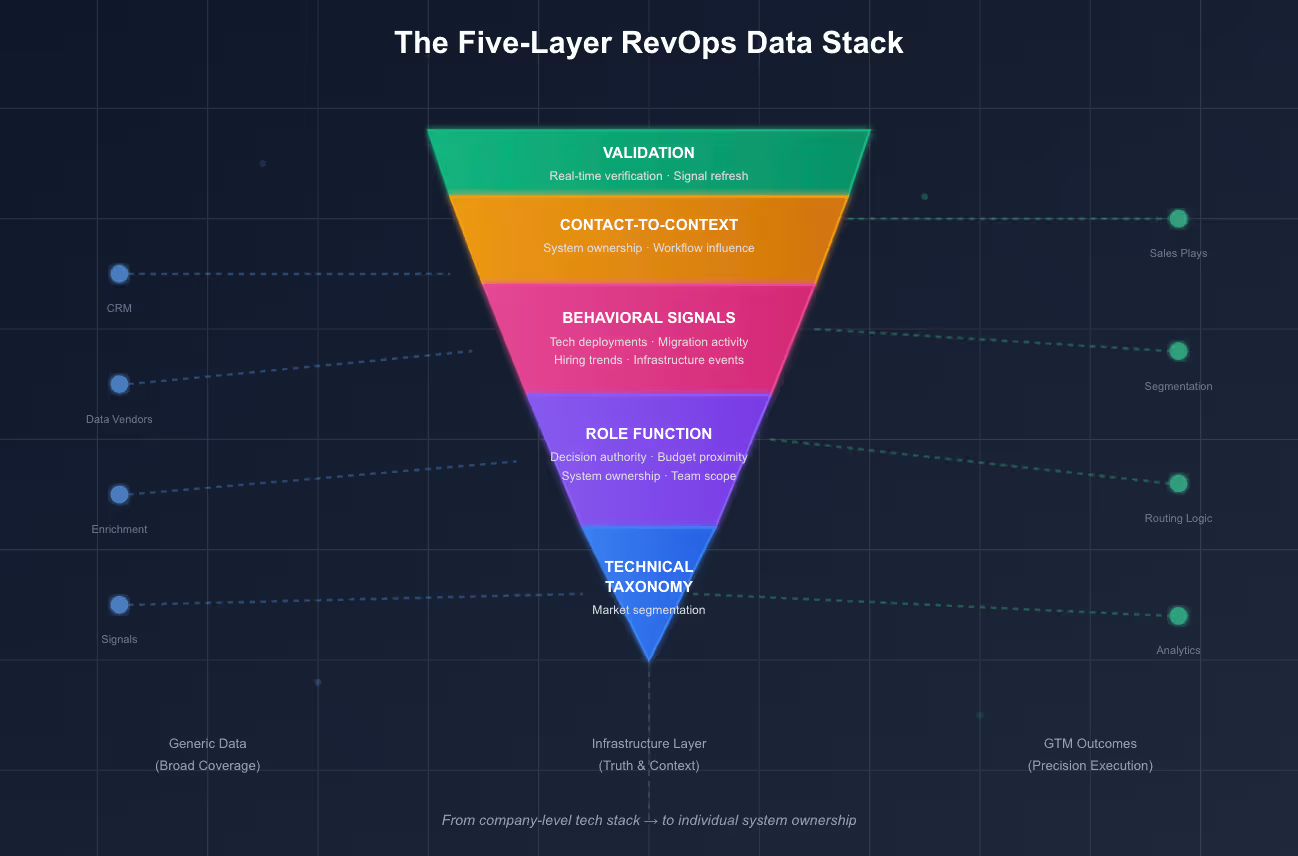

The Five-Layer Data Stack You Need to Build

Layer 1: Technical Taxonomy Design

Stop using vendor-provided industry categories. Build your own taxonomy that maps to how your market actually segments itself.

For developer tools:

- DevOps vs Platform Engineering vs SRE

- Data Engineering vs Analytics Engineering vs MLOps

- Application Security vs Infrastructure Security vs GRC

For vertical SaaS:

- Role-specific workflows within your vertical

- Tool categories by use case, not vendor

- Decision-making hierarchies

RevOps deliverable: A documented taxonomy that your sales, marketing, and product teams all reference. This becomes your source of truth for segmentation, routing, and reporting.

Layer 2: Role Function Mapping

Title standardization isn't enough. You need functional responsibility mapping.

Build fields for:

- Technical decision authority (recommender, evaluator, economic buyer)

- System ownership (what infrastructure they control)

- Budget proximity (do they own it, influence it, or just use it)

- Team size and scope (managing 5 people vs 50)

Implementation note: This requires custom object creation in your CRM or data warehouse. Don't try to shoehorn this into standard contact fields.

Layer 3: Behavioral Signal Architecture

Move beyond intent data. Build operational signal tracking.

Signal categories to instrument:

- Tech stack changes (new deployments, migrations, version upgrades)

- Team composition changes (hiring for specific roles or skills)

- Infrastructure events (security incidents, compliance deadlines, scale milestones)

- Open source activity (contributions to relevant projects)

- Funding + hiring + stack combinations

Data flow design:

- Signal detection (via data partner, scraping, or third-party feeds)

- Signal validation (automated + manual QA)

- Signal scoring (relevance to your ICP criteria)

- Signal routing (to appropriate GTM motion)

- Signal decay modeling (when does a signal stop being relevant)

Layer 4: Contact-to-Context Linking

Every contact record needs to answer: "What systems does this person touch, and what outcomes do they care about?"

Required context fields:

- Primary systems owned/managed

- Workflow influence map (which processes they impact)

- Business metrics they're measured on

- Technology dependencies

Example populated record:

Name: Alex Chen

Title: Staff Platform Engineer

Company: Acme Corp (Series B, 180 employees)

Technical Context:

- Manages k8s clusters for product engineering team (65 engineers)

- Owns CI/CD pipeline (GitHub Actions + custom tooling)

- Influences: build performance, deployment security, infra costs

- Reports to: VP Engineering

- Budget influence: Recommender for dev tools, evaluator for infrastructure

Recent Signals:

- Team grew 40% in last 6 months

- Posted job req for "Platform Engineer - Security" 2 weeks ago

- Company announced SOC 2 initiative last quarter

This is searchable, filterable, and reportable data. Not notes fields.

Layer 5: Data Validation and Refresh Infrastructure

Technical markets move fast. Your data architecture needs to account for drift.

Build processes for:

- Job change detection and contact reassignment

- Tool usage verification (is this signal still valid?)

- Org structure updates

- Champion tracking across role changes

- Technology sunset/adoption detection

Refresh cadence by data type:

- Contact employment: Weekly

- Tool usage signals: Monthly

- Org hierarchy: Quarterly

- Custom taxonomy: Annually (or as market shifts)

System Architecture: How This Actually Works

Core components:

- Data warehouse (your source of truth)

- Contact master records

- Signal history tables

- Taxonomy and mapping tables

- Validation workflow logs

- CRM sync layer

- Bidirectional sync with custom field mapping

- Signal-triggered task creation

- Automated list/segment updates

- Enrichment orchestration (Clay, custom builds, or API-first tools)

- Multi-source waterfall logic

- Custom parsing and validation

- Manual review queues for edge cases

- Reporting infrastructure

- Data coverage dashboards

- Signal performance analytics

- ICP match scoring

- Pipeline attribution by signal type

How This Changes Your GTM Metrics

Old metrics:

- Total contacts in database

- Account coverage percentage

- Generic "intent signals"

New metrics:

- ICP-verified contacts by technical segment

- Contacts with active operational signals

- Coverage of tool-specific decision makers

- Signal-to-opportunity conversion rate

- Deal velocity by signal category

Implementation Roadmap for RevOps

Phase 1: Foundation (Months 1-2)

- Document your technical taxonomy

- Audit current data quality

- Map must-have vs nice-to-have contact context

- Choose data warehouse + orchestration stack

Phase 2: Infrastructure Build (Months 2-4)

- Set up custom CRM objects and fields

- Build data warehouse schema

- Implement first enrichment waterfall

- Create validation workflows

Phase 3: Signal Layer (Months 4-6)

- Integrate signal sources

- Build signal scoring model

- Set up routing and alerting

- Create signal performance dashboards

Phase 4: Optimization (Ongoing)

- A/B test signal types

- Refine taxonomy based on win/loss data

- Automate more validation steps

- Expand coverage systematically

When to Build vs Buy

Build in-house if:

- Your market is extremely niche

- Existing vendors don't cover your specific technical segment

- You have engineering resources and strong data culture

- Proprietary signal creation is a competitive advantage

Partner with specialists if:

- Speed to market matters more than perfect fit

- Your RevOps team is lean

- The vendor has proven depth in your specific vertical

- You can combine vendor data with internal enrichment layers

Hybrid approach (most common):

- Use vendors for broad coverage and baseline enrichment

- Build custom layers for unique signals and taxonomy

- Keep validation and truth-testing in-house

Common Pitfalls to Avoid

- Over-indexing on AI/automation before defining what matters

- Claude and other LLMs are great at parsing and routing

- They can't tell you what signals actually predict revenue

- Start with taxonomy and signal design, then automate

- Treating this as a marketing project

- This is RevOps infrastructure

- It impacts sales velocity, expansion motions, and product feedback loops

- Governance needs to sit with Revenue Operations, not demand gen

- Ignoring data decay

- Technical roles change faster than other B2B personas

- Tool adoption shifts quarterly in fast-moving categories

- Budget for ongoing refresh, not one-time enrichment

- Mistaking coverage for quality

- 100,000 generic contacts < 10,000 ICP-verified, context-rich contacts

- Optimize for match rate to ICP, not database size

Success Metrics: What Good Looks Like

Data health:

- 80%+ of ICP contacts have technical context populated

- Signal refresh cycle < 30 days for active contacts

- < 5% invalid employment records in active pipeline

GTM performance:

- 30%+ improvement in SDR qualification rate

- 20%+ faster time from MQL to SQL

- 15%+ higher win rate on signal-sourced opportunities

- Measurable reduction in "not a fit" disqualifications

Team adoption:

- Sales reps reference technical context in 70%+ of discovery calls

- Marketing builds campaigns by technical segment, not just title

- CS uses tool usage context for expansion plays

The Strategic Shift

You're not just buying better data. You're building a competitive moat.

When your GTM data infrastructure mirrors how your market actually operates — how work flows, how tools are chosen, how budgets are allocated — you stop competing on price and start competing on understanding.

Your sales cycles get shorter because you're talking to the right people about the right problems.

Your marketing gets more efficient because you're not wasting budget on irrelevant audiences.

Your product team gets better signal about what to build next.

This is infrastructure work. It's not glamorous. But it's the difference between scaling cleanly and hitting a GTM ceiling at $20M ARR.

Next Steps

- Audit your current data stack against this framework

- Identify your biggest gap (taxonomy, signals, context, or validation)

- Build a business case for the infrastructure investment

- Start with one technical segment and prove the model

- Expand systematically as you validate ROI

The companies winning in vertical and technical B2B aren't just better at sales. They've architected their entire GTM data layer around how their buyers actually work.

That architecture starts in RevOps.